Linear Attention Overview

Linear Attention

Let’s start by looking at the standard attention formula:

$$ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V $$

Where:

- $V$ is the value matrix representing meanings in the sequence, dimensions $n \times d_v$
- $K$ is the key matrix representing indexes/locations of the values, dimensions $n \times d_k$
- $Q$ is the query matrix representing information we currently need, dimensions $n \times d_k$
- $d_k$ is the dimension of the key vectors

The output is a weighted sum of the value vectors $V$, where the weights are determined by the attention scores....
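To make the formula concrete, here is a minimal sketch of standard (softmax) attention in plain Python, using only the standard library. The function names `softmax` and `attention` and the toy inputs are made up for illustration and are not taken from the post; a real implementation would use a tensor library instead of nested lists.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention on lists of row vectors.

    Q, K: n rows of length d_k; V: n rows of length d_v.
    Returns (output, weights) with shapes n x d_v and n x n.
    """
    d_k = len(K[0])
    scale = math.sqrt(d_k)
    # scores[i][j] = (q_i . k_j) / sqrt(d_k)
    scores = [
        [sum(q * k for q, k in zip(q_row, k_row)) / scale for k_row in K]
        for q_row in Q
    ]
    # Each row of weights sums to 1 (softmax is applied row-wise).
    weights = [softmax(row) for row in scores]
    d_v = len(V[0])
    # output[i] = sum_j weights[i][j] * V[j]
    output = [
        [sum(w * v_row[c] for w, v_row in zip(w_row, V)) for c in range(d_v)]
        for w_row in weights
    ]
    return output, weights

# Toy inputs (hypothetical values): n = 3, d_k = 2, d_v = 2
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
output, weights = attention(Q, K, V)
```

Note that `scores` is an $n \times n$ matrix, so both time and memory grow quadratically with the sequence length $n$.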

Human-like LLMs

Introduction

I like to start my posts by clearly stating the questions that drove me to write them, because I think it’s always good to keep the motivation in mind. So here is this time’s question: given that LLM detectors exist, and that many of them claim reasonable accuracy, surely we can train an LLM to beat those detectors, right? And if so, does it mean that we can train an LLM to be human-like?...

SVGFusion-mini: Approaches & Limitations of Generative Diffusion for SVGs

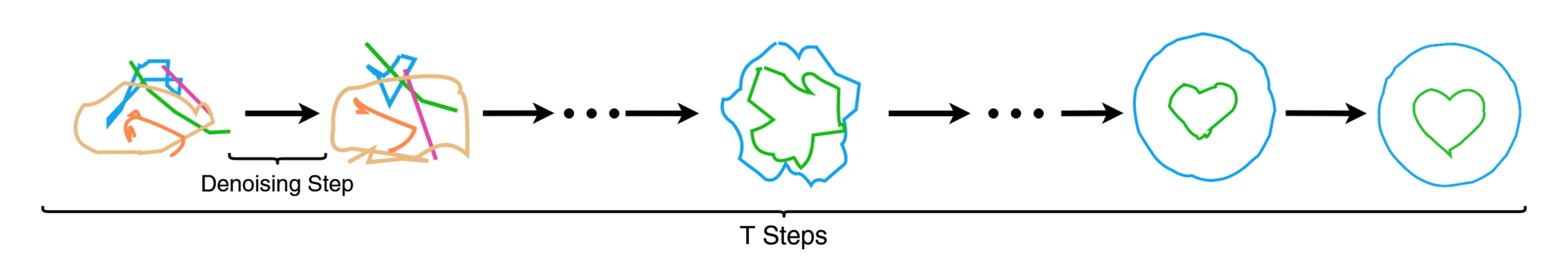

Introduction

I have talked at length before about diffusion and also about SVGs, but not about how to combine the two. If you want to gain an understanding of data generation using diffusion, you can read my post here. Alternatively, if you’re interested in manipulating SVGs, you can check out my other post here. In a sense, this post is the culmination of both of them. If you’re not familiar with either topic, I recommend skimming through the other posts first....

What is Diffusion?

Introduction

Diffusion models, the class of AI models that includes Stable Diffusion and DALL-E 2 among others, rely on the simple yet powerful concept of diffusion. But what, exactly, is diffusion? And why is it so important that it became the core of most of the fancy new generative models? These are the two questions I will try to answer in this post.

The Theory of Diffusion

Definition

The word diffusion is defined as a “process […] by which there is a net flow of matter from a region of high concentration to a region of low concentration” ( Citation: Encyclopedia Britannica, Encyclopedia Britannica (s....

On Trusting Trust

Introduction

A while ago, a junior asked me about using ChatGPT for programming and whether it’s a good idea, which reminded me of why you should, as a rule, distrust technology. Aside from ChatGPT, which you obviously shouldn’t rely on for programming by the way (despite what the internet wants you to believe), technology always has a bias, sometimes even a malicious one, that I like to remind people of, so let’s dedicate this post to that....